I am a student in the College of Arts and Sciences at Cornell University studying computer science and marine biology. In my free time, I enjoy coding projects (currently a lot of GPU projects), fishkeeping (really anything coral-related), photography, playing games with friends, and playing soccer.

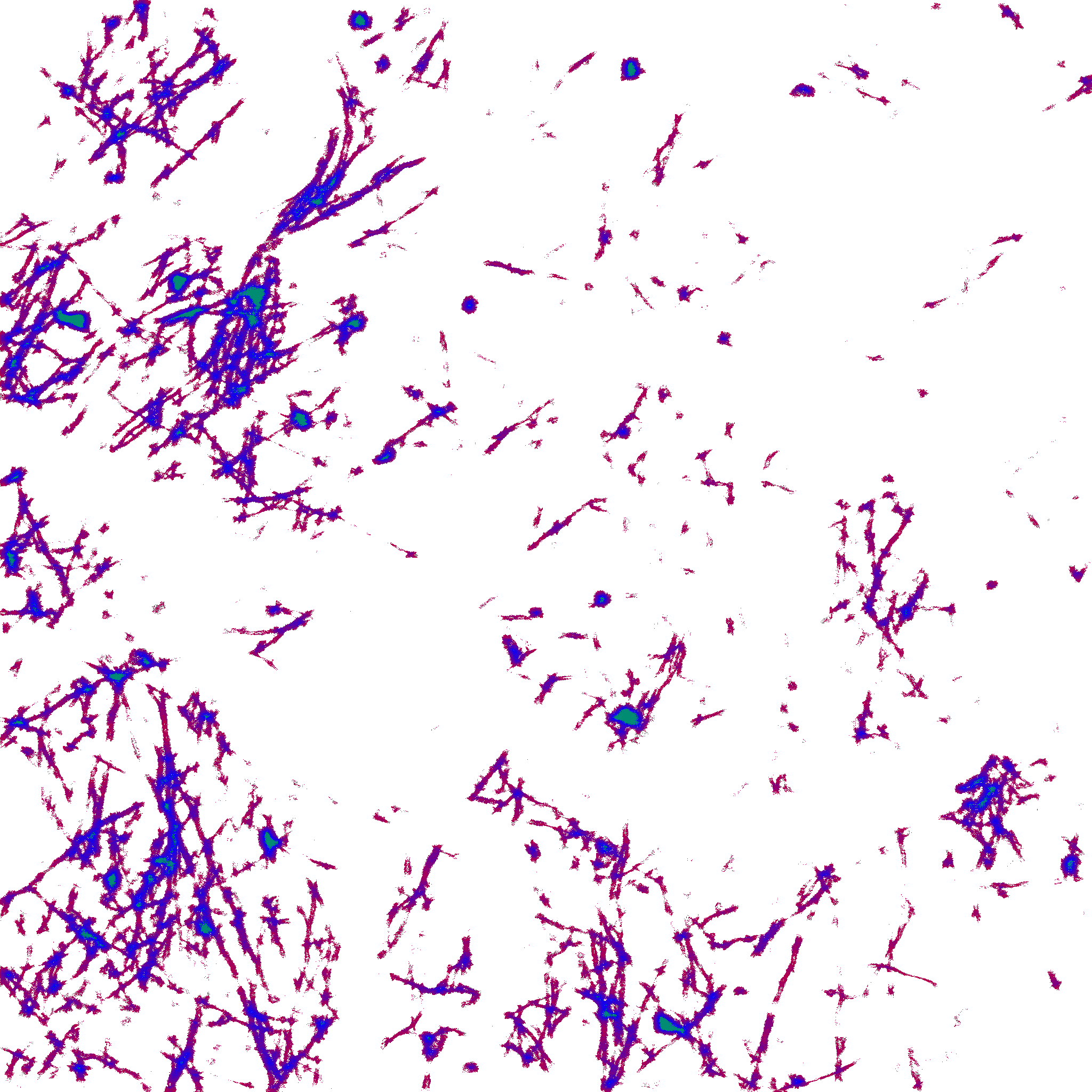

I have been interning in Professor Robert Hill's Neurobiology Lab at Dartmouth College for the past three summers. My first summer, I learned the ins and outs of the wet lab. In my free time, I created a denoising algorithm (which runs on the GPU, of course) that distinguishes oligodendrocytes from image artifacts by their linear morphology. This algorithm typically takes a few seconds to run and is fairly accurate, although it requires some user input to make sure the program is using the correct weights for each image.

While my second summer in Professor Hill's lab was entirely remote, I was able to leverage my computer science background and get to work. I took on the task of optimizing the process of tracing oligodendrocytes, a time-intensive step in quantifying oligodendrocyte behavior and activity. I first looked into VR tools like Vaa3D and Agave, but neither was accurate enough for our tasks. By the end of the summer, I had essentially rewritten an industry-standard tracing program (Simple Neurite Tracer) to run entirely on the GPU for better performance.
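Semi-automatic tracers in the Simple Neurite Tracer family work roughly like this: the user picks two points on a cell, and the program searches for the cheapest path between them, where bright pixels are cheap to cross and dark background is expensive (SNT itself uses a bidirectional A* search). The Swift sketch below shows that idea on a 2D image with plain Dijkstra on the CPU. The cost function `stepLength / (intensity + 1e-3)`, the 8-connected grid, and the names `Point` and `tracePath` are assumptions for illustration; this is not the lab's GPU implementation.

```swift
// Hypothetical sketch: trace a structure as the cheapest path between two seed pixels.
// Assumed cost of entering a pixel: stepLength / (intensity + 1e-3), so bright
// (labelled) structures are cheap to follow and dark background is expensive.
struct Point: Hashable {
    let x: Int
    let y: Int
}

/// Dijkstra search over an 8-connected grid of intensities in [0, 1].
/// Assumes a non-empty, rectangular image and in-bounds endpoints.
func tracePath(image: [[Double]], from start: Point, to goal: Point) -> [Point] {
    let height = image.count, width = image[0].count
    var dist = Array(repeating: Array(repeating: Double.infinity, count: width), count: height)
    var prev = [Point: Point]()
    var visited = Set<Point>()
    dist[start.y][start.x] = 0

    while true {
        // Simple O(V^2) selection of the closest unvisited pixel; a real tracer would use a heap or A*.
        var best: Point? = nil
        var bestDist = Double.infinity
        for y in 0..<height { for x in 0..<width {
            let p = Point(x: x, y: y)
            if !visited.contains(p) && dist[y][x] < bestDist { best = p; bestDist = dist[y][x] }
        } }
        guard let current = best else { break }   // nothing reachable is left
        if current == goal { break }              // shortest path to the goal is settled
        visited.insert(current)

        // Relax all 8 neighbours; diagonal steps are longer by a factor of sqrt(2).
        for dy in -1...1 { for dx in -1...1 where dx != 0 || dy != 0 {
            let nx = current.x + dx, ny = current.y + dy
            guard nx >= 0, ny >= 0, nx < width, ny < height else { continue }
            let stepLength = (dx != 0 && dy != 0) ? 2.0.squareRoot() : 1.0
            let cost = stepLength / (image[ny][nx] + 1e-3)
            if bestDist + cost < dist[ny][nx] {
                dist[ny][nx] = bestDist + cost
                prev[Point(x: nx, y: ny)] = current
            }
        } }
    }

    // Walk the predecessor links back from the goal, then flip to start -> goal order.
    var path = [goal]
    while let p = prev[path.last!] { path.append(p) }
    return path.reversed()
}
```

On an image containing a single bright horizontal line, the traced path between two points on that line stays on it, because every detour into the dark background costs roughly a thousand times more per pixel.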

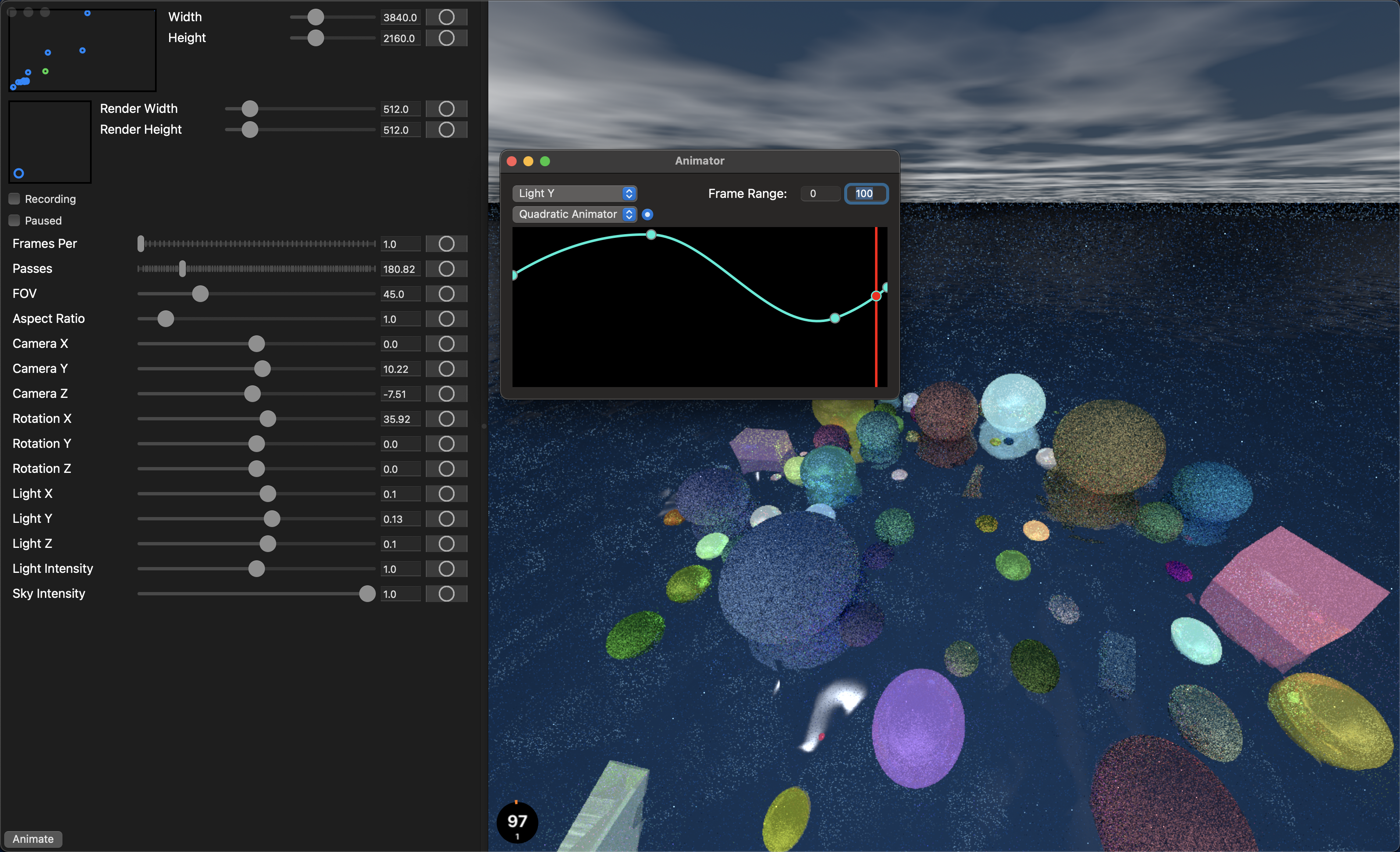

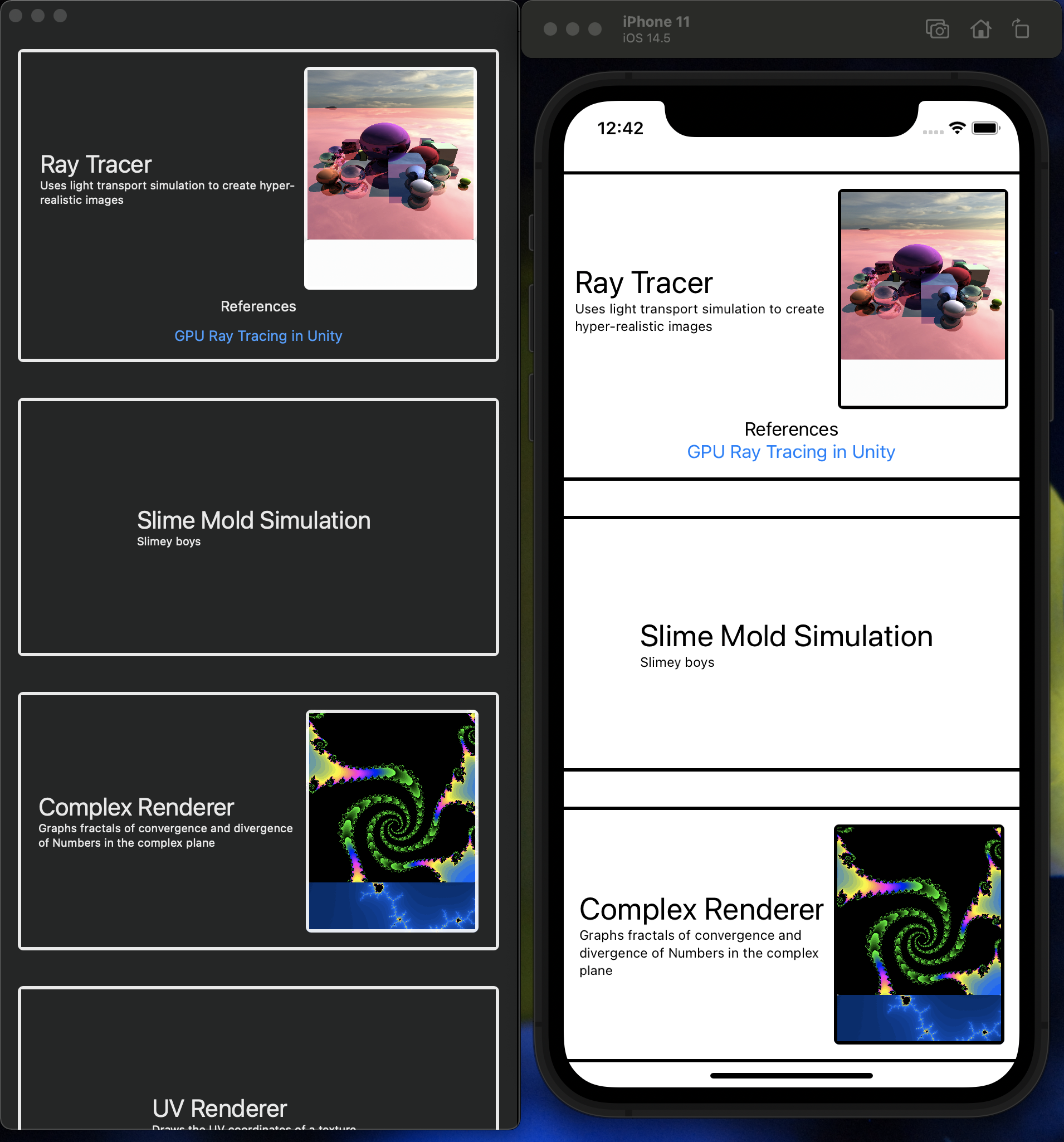

As you will see below, I have had quite a number of endeavors into graphics (with many more to come). These projects require a lot of boilerplate to get going, and I have wasted too much time getting basic things to work instead of playing around with fun concepts. So I built a project, Graphics Hub, with as much abstraction as possible, so that I can reuse boilerplate and get right into the meat of a problem. In addition to graphics boilerplate, this project also includes input management, input animation, and image capture for recording crispy videos. I could switch to Unity and get significantly better input control (without animations), which I will probably do rather soon. But for now, I have had a blast writing code with MetalKit. Below is a video I compiled that summarizes my endeavors into fun coding projects. Leading up to the title sequence is a montage of a bunch of projects I have done over the years. In conjunction with Graphics Hub, I also built a Hackintosh. This allowed me to really delve into the world of graphics, and everything after the title sequence is something I recorded using Graphics Hub. Enjoy!

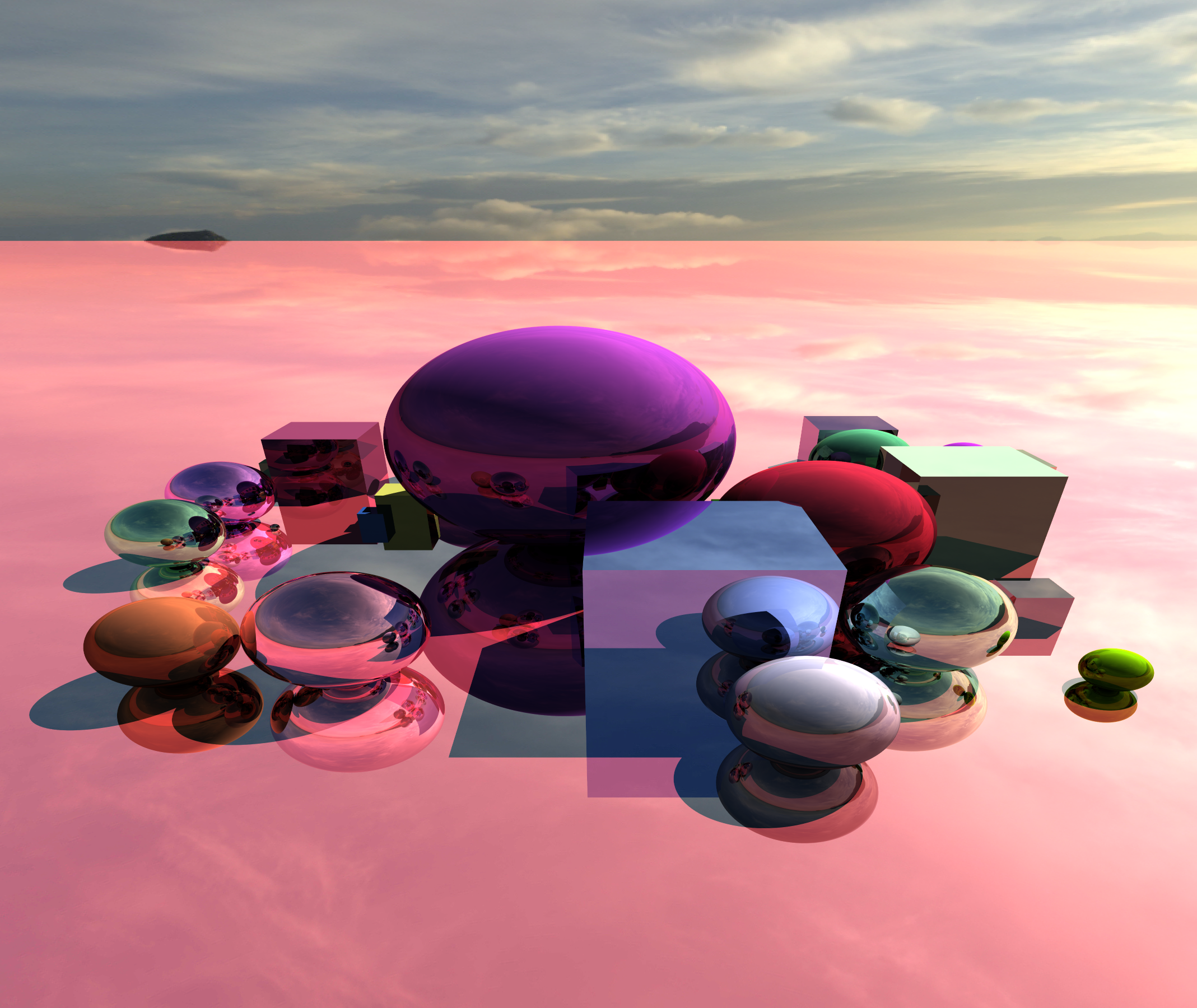

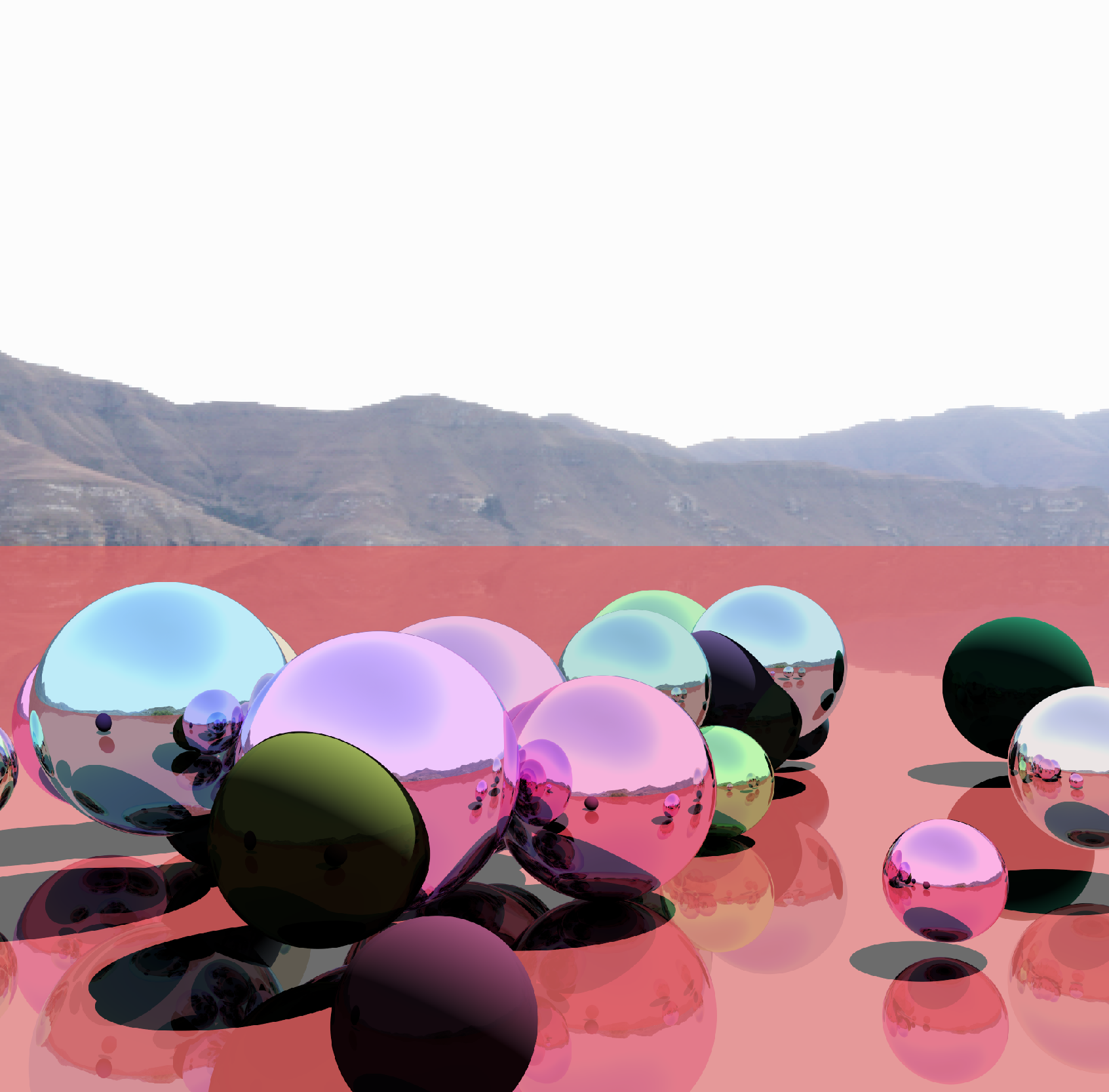

It's time to put my ray tracing skills to use! At the moment, I am upgrading to a ray marching renderer, but I would like to create some animations soon (hopefully a curl noise visualizer)!

This was another project inspired by one of Sebastian Lague's YouTube videos. For those who don't know, ray tracing is a method of rendering a 3D scene by casting rays from a camera into the scene. As these rays bounce around, they are reflected off of or refracted through objects according to each object's material properties, picking up color along the way. Tracing rays between the scene and the light source can render shadows as well! This project had some significant obstacles for me because I used a tutorial meant for Unity, which has some nice tools that Apple's environment doesn't have (or that I haven't found yet). I spent a while understanding 4x4 camera projection matrices and mapping each pixel of the image to a ray in the 3D scene. Currently, my project renders refraction, reflection, and shadows for a scene with a plane and randomly generated spheres. I am working on adding triangular faces as well, because then I can add any 3D model I want. The math is a little tricky, though, and I want to figure it out instead of looking it up... The project runs in real time, but it drops frames once the image gets larger than 512x512. I also added antialiasing to smooth the images, but this feature only works for still images (not anything with a high refresh rate) because it just isn't feasible in real time. With this image-focused mode, I can expand the project to incorporate many more material properties, which require a high number of passes to render properly.
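A scene of randomly generated spheres rests on one core test: where a ray first hits a sphere. Substituting the ray `origin + t * dir` into `|p - center|^2 = radius^2` gives a quadratic in `t`, and the nearest positive root is the hit distance. Below is a minimal CPU sketch in Swift, assuming normalized ray directions; `Vec3`, `hitSphere`, and `reflect` are hypothetical names (a Metal shader would use `float3` instead), and this is not the project's actual shader code.

```swift
// Minimal 3-component vector standing in for Metal's float3 / simd types.
struct Vec3 {
    var x, y, z: Double
    static func + (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x + b.x, y: a.y + b.y, z: a.z + b.z) }
    static func - (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z) }
    static func * (v: Vec3, s: Double) -> Vec3 { Vec3(x: v.x * s, y: v.y * s, z: v.z * s) }
    func dot(_ o: Vec3) -> Double { x * o.x + y * o.y + z * o.z }
}

/// Distance t along origin + t * dir to the nearest hit in front of the origin, or nil on a miss.
/// Assumes `dir` is normalized, so the quadratic's leading coefficient is 1.
func hitSphere(origin: Vec3, dir: Vec3, center: Vec3, radius: Double) -> Double? {
    let oc = origin - center
    let halfB = oc.dot(dir)                      // half of the usual "b" coefficient
    let c = oc.dot(oc) - radius * radius
    let discriminant = halfB * halfB - c
    guard discriminant >= 0 else { return nil }  // the ray misses the sphere entirely
    let root = discriminant.squareRoot()
    let tNear = -halfB - root
    if tNear > 1e-6 { return tNear }             // small epsilon avoids re-hitting the surface just left
    let tFar = -halfB + root                     // origin is inside the sphere
    return tFar > 1e-6 ? tFar : nil
}

/// Mirror reflection of `dir` about the unit surface normal `n`: r = d - 2(d . n)n.
func reflect(_ dir: Vec3, _ n: Vec3) -> Vec3 {
    dir - n * (2 * dir.dot(n))
}
```

Per pixel, the camera ray would be tested against every sphere, keeping the smallest `t`; the hit point is `origin + dir * t`, and a reflection bounce starts a new ray there with direction `reflect(dir, normal)`.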

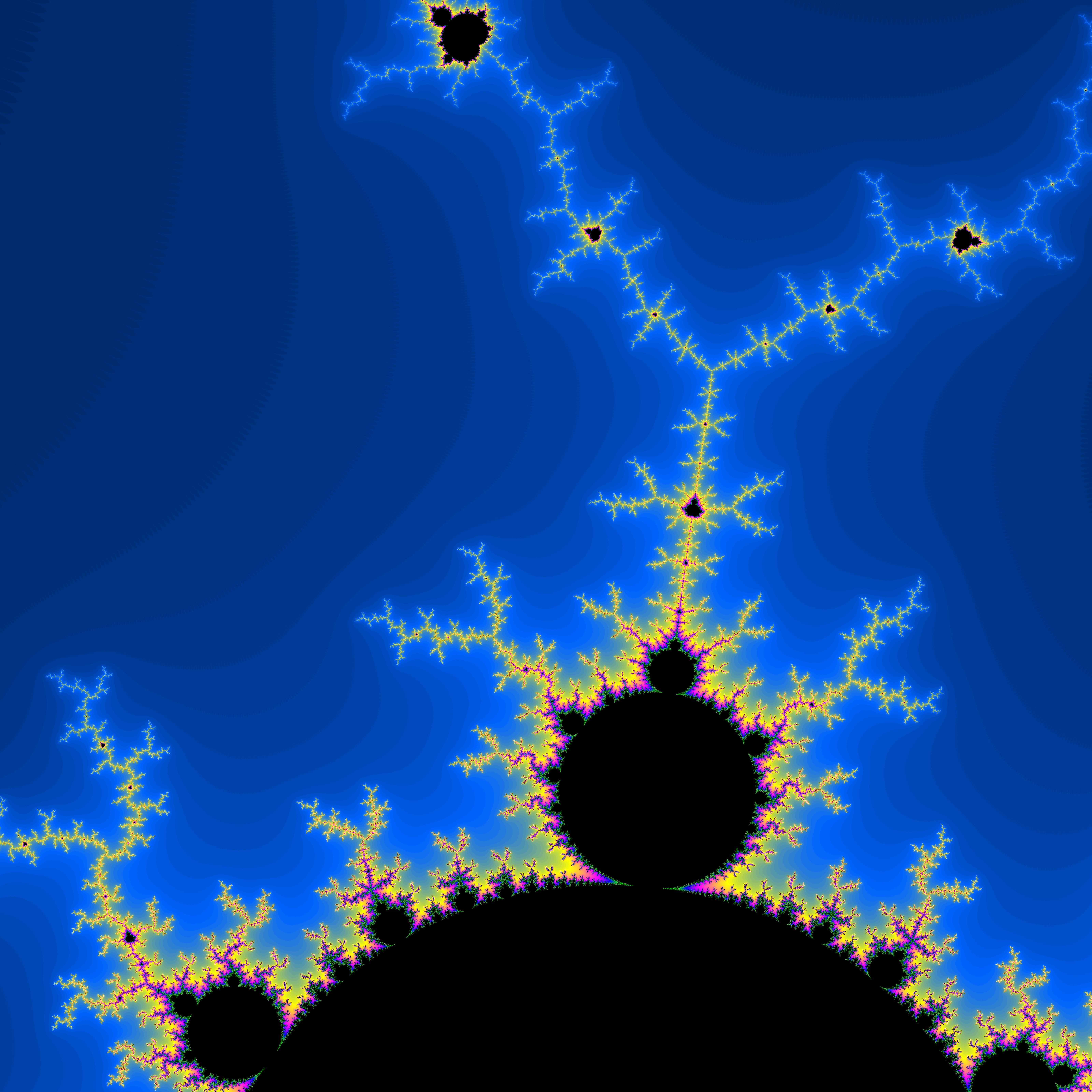

(This is about to get a tad cheesy, and I apologize in advance.) When I was first learning about complex numbers, my math teacher tried to show us the importance of this seemingly useless concept by pointing to some gorgeous fractals in our class textbook and saying that they were completely based on complex numbers. That moment gave me a much greater appreciation for these designs, which I had overlooked in essentially all of my math textbooks. Since then, I have wanted to generate my own fractals, but I didn't have the means to do so until I learned to code. After learning about compute shaders with Boids, I could not wait to tackle this project. I spent countless hours randomly generating images and playing around with the colors. As I learned more about GPUs, I changed the project from rendering individual images to rendering animatable fractals, from which I saved tons of photos and videos. They're so pretty! This is probably one of my favorite projects, and I love to revisit it. I also can't stop showing off the images, hence the website's backgrounds :).
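The link between complex numbers and these images is easiest to see in the classic escape-time algorithm for the Mandelbrot set: iterate `z -> z^2 + c` starting from `z = 0` and count how many steps `|z|` stays at most 2. Below is a plain CPU sketch in Swift, not the project's GPU code; the function names, the viewing window `[-2, 1] x [-1.2, 1.2]`, and the ASCII output are illustrative assumptions. On the GPU, each thread would run `escapeTime` for one pixel and map the count through a color palette.

```swift
/// Escape-time iteration for the Mandelbrot set: z_{n+1} = z_n^2 + c with z_0 = 0.
/// Returns the iteration at which |z| first exceeded 2, or maxIterations if it never did
/// (the point is then treated as inside the set). Complex numbers are (re, im) pairs.
func escapeTime(cRe: Double, cIm: Double, maxIterations: Int) -> Int {
    var zRe = 0.0, zIm = 0.0
    for n in 0..<maxIterations {
        // |z| > 2 guarantees divergence; compare the squared magnitude against 4.
        if zRe * zRe + zIm * zIm > 4 { return n }
        // (a + bi)^2 = (a^2 - b^2) + 2abi
        let nextRe = zRe * zRe - zIm * zIm + cRe
        zIm = 2 * zRe * zIm + cIm
        zRe = nextRe
    }
    return maxIterations
}

/// Hypothetical text renderer: maps a width x height grid onto [-2, 1] x [-1.2, 1.2]
/// and marks points that never escaped with "#".
func asciiMandelbrot(width: Int, height: Int, maxIterations: Int = 50) -> [String] {
    (0..<height).map { row -> String in
        let cIm = 1.2 - 2.4 * Double(row) / Double(height - 1)
        return String((0..<width).map { col -> Character in
            let cRe = -2.0 + 3.0 * Double(col) / Double(width - 1)
            return escapeTime(cRe: cRe, cIm: cIm, maxIterations: maxIterations) == maxIterations ? "#" : "."
        })
    }
}
```

For animation, one common approach (an assumption here, since the original technique isn't described) is to render a Julia set instead: fix `c`, start `z` at each pixel's coordinate, and sweep `c` slowly over time.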

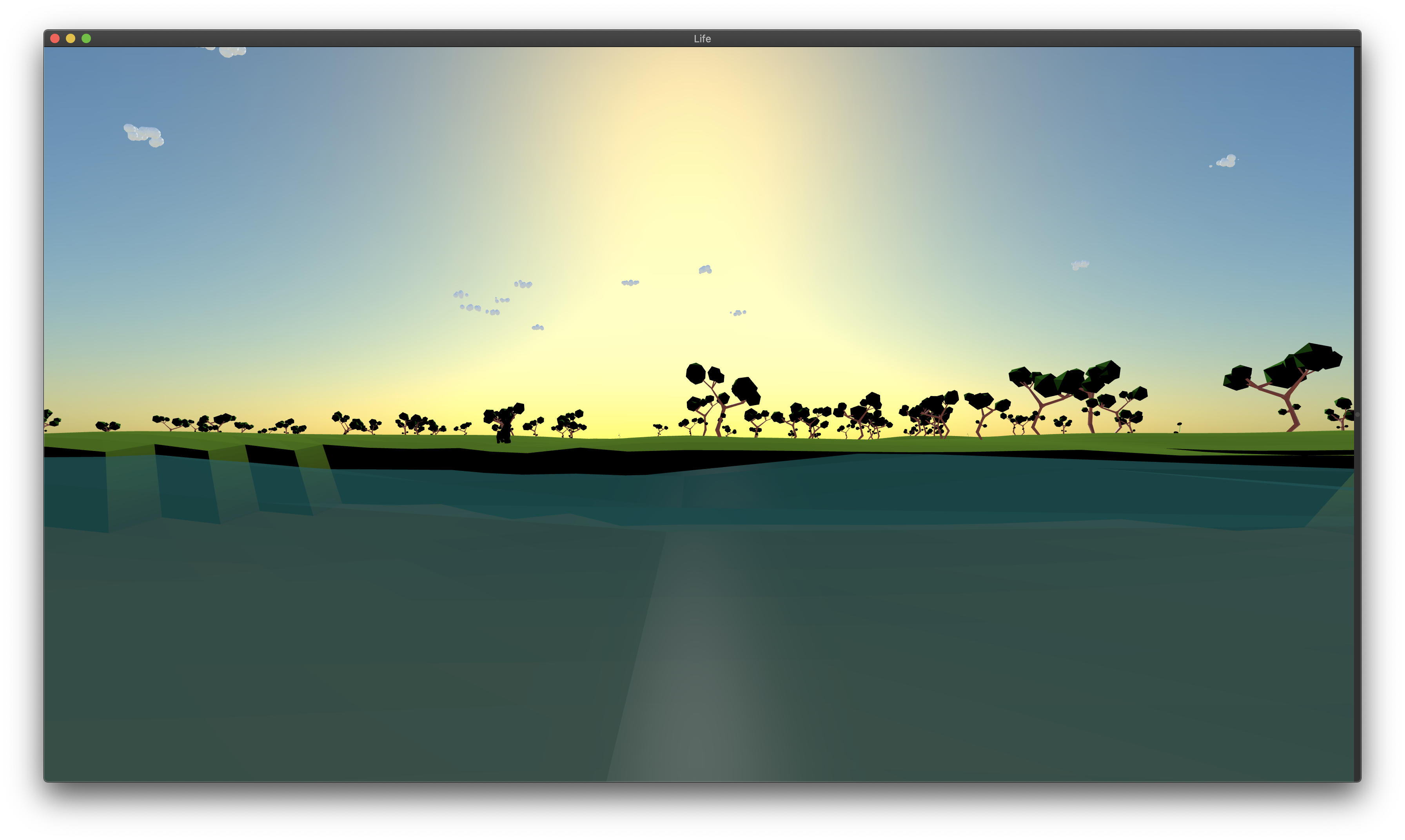

This was a school project a friend and I made for March Intensive (essentially a project week). Inspired by Sebastian Lague's and Primer's explorations of evolution through ecosystem simulations, we set out to make our own rendition. We used SceneKit as our game engine, Blender for modeling, and GitHub for source control. It was my first time working with someone else on a major project. Xcode's source control was somewhat annoying to work with, but ultimately it was an enjoyable and helpful process. In the end, we were able to add a thriving population of bunnies. We also added a species of bird that falls into the uncanny valley because we did not animate their movements; they just kind of glide everywhere. There are also foxes, but they definitely need some work so that they don't decimate the bunny population. I had an absolute blast playing with shaders for the water, trees, and cloud movement, and I think they look absolutely stellar. There are three basic food groups: growing plants (berry bushes and cactus fruits that replenish their food after some time, shown with some crispy animation); apples, which fall from trees; and meat, which comes from predation. In the future, I hope to remake this project with a GPU-driven model to fix some difficulties with movement and performance and to add nice features like ray tracing. There are some precursors in the project, like Perlin noise terrain generation, but it is far from finished.
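Perlin noise terrain generation boils down to gradient noise: every integer lattice point gets a pseudo-random gradient, each sample blends the four surrounding corners' contributions with a smooth fade curve, and several octaves are summed to get terrain-like detail. The Swift sketch below follows that recipe. The integer hash and the 8-direction gradient set are assumptions standing in for Ken Perlin's shuffled permutation table, and `perlin` and `terrainHeight` are hypothetical names, not the project's code.

```swift
/// Quintic fade curve 6t^5 - 15t^4 + 10t^3 from Perlin's improved noise.
func fade(_ t: Double) -> Double { t * t * t * (t * (t * 6 - 15) + 10) }

func lerp(_ a: Double, _ b: Double, _ t: Double) -> Double { a + t * (b - a) }

// Assumed gradient set: 4 axis directions and 4 (unnormalized) diagonals.
let gradients: [(Double, Double)] = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, 1), (-1, 1), (1, -1), (-1, -1)]

/// Hypothetical integer hash that picks a repeatable gradient for each lattice point.
func gradient(_ ix: Int, _ iy: Int, seed: Int) -> (Double, Double) {
    var h = UInt64(truncatingIfNeeded: ix &* 374_761_393 &+ iy &* 668_265_263 &+ seed &* 1_442_695_041)
    h = (h ^ (h >> 13)) &* 1_274_126_177
    h ^= h >> 16
    return gradients[Int(h % 8)]
}

/// 2D gradient noise; exactly 0 at every integer lattice point.
func perlin(_ x: Double, _ y: Double, seed: Int = 0) -> Double {
    let x0 = Int(x.rounded(.down)), y0 = Int(y.rounded(.down))
    let fx = x - Double(x0), fy = y - Double(y0)  // position inside the cell, in [0, 1)
    // Dot product of a corner's gradient with the vector from that corner to (x, y).
    func corner(_ dx: Int, _ dy: Int) -> Double {
        let g = gradient(x0 + dx, y0 + dy, seed: seed)
        return g.0 * (fx - Double(dx)) + g.1 * (fy - Double(dy))
    }
    let u = fade(fx), v = fade(fy)
    let bottom = lerp(corner(0, 0), corner(1, 0), u)
    let top = lerp(corner(0, 1), corner(1, 1), u)
    return lerp(bottom, top, v)
}

/// Fractal terrain height: octaves at doubling frequency and halving amplitude.
func terrainHeight(_ x: Double, _ y: Double, octaves: Int = 4) -> Double {
    var total = 0.0, amplitude = 1.0, frequency = 1.0
    for _ in 0..<octaves {
        total += amplitude * perlin(x * frequency, y * frequency)
        amplitude *= 0.5
        frequency *= 2
    }
    return total
}
```

Sampling `terrainHeight` on a grid of (x, z) positions and using the result as each vertex's height yields a heightmap that could then be turned into a terrain mesh.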

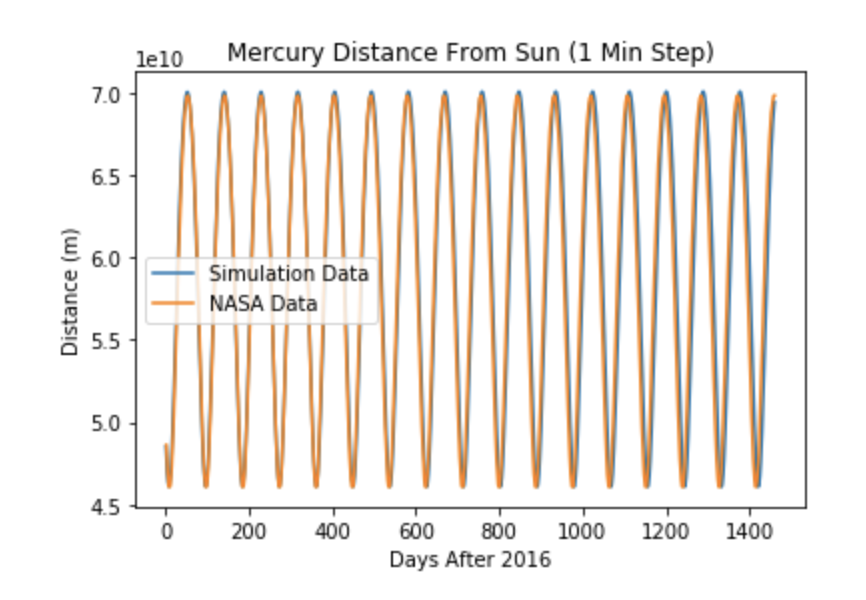

I decided to make a solar system simulation for my final project in my physics class. Using the Runge-Kutta method as well as information scraped from two NASA websites, I was able to build 2D and 3D simulations. These simulations worked really well, averaging 1% error over quite lengthy simulations, but they slowly diverged from the projections. This was probably due to rounding errors in the data and program. I had a blast doing this project, and I will definitely revisit it in the future!
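The Runge-Kutta method advances a simulation by combining four slope estimates per time step. Below is a minimal Swift sketch of classical fourth-order Runge-Kutta (RK4) for a single planet orbiting a fixed Sun in 2D, assuming units of AU and years so that G times the Sun's mass equals 4π². The NASA data, the other planets, and planet-planet gravity from the original project are left out, and `State`, `derivative`, and `rk4Step` are hypothetical names.

```swift
// 2D state of one body: position in AU and velocity in AU/year.
struct State {
    var x, y, vx, vy: Double
    static func + (a: State, b: State) -> State {
        State(x: a.x + b.x, y: a.y + b.y, vx: a.vx + b.vx, vy: a.vy + b.vy)
    }
    static func * (a: State, s: Double) -> State {
        State(x: a.x * s, y: a.y * s, vx: a.vx * s, vy: a.vy * s)
    }
}

// In AU/year units, G * M_sun = 4π² (a 1 AU circular orbit takes exactly 1 year).
let gmSun = 4 * Double.pi * Double.pi

/// Time derivative: d(position)/dt = velocity, d(velocity)/dt = -GM * r / |r|^3.
func derivative(_ s: State) -> State {
    let r = (s.x * s.x + s.y * s.y).squareRoot()
    let k = -gmSun / (r * r * r)
    return State(x: s.vx, y: s.vy, vx: k * s.x, vy: k * s.y)
}

/// One classical RK4 step: weighted average of slopes at the start, two midpoints, and the end.
func rk4Step(_ s: State, dt: Double) -> State {
    let k1 = derivative(s)
    let k2 = derivative(s + k1 * (dt / 2))
    let k3 = derivative(s + k2 * (dt / 2))
    let k4 = derivative(s + k3 * dt)
    return s + (k1 + k2 * 2 + k3 * 2 + k4) * (dt / 6)
}

// Usage: an Earth-like circular orbit (speed 2π AU/year), stepped for one year.
var earth = State(x: 1, y: 0, vx: 0, vy: 2 * Double.pi)
for _ in 0..<1000 { earth = rk4Step(earth, dt: 0.001) }
```

After 1000 steps of 0.001 years, the planet should be back very close to its starting point (1, 0). Halving `dt` and comparing the two runs is a quick way to estimate how much of a long-run drift comes from the integrator's step size rather than from the input data.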

My first ever project on the GPU was a Boids simulation inspired by Sebastian Lague (seen on the right). The Boids algorithm is a simple method for approximating flocks: each boid steers using only its nearby flockmates. Lague's video was fantastic, and I really wanted my own boid simulation. I played around with Unity, but I wanted to keep using Swift. After a few infinite loops on the GPU and many computer crashes, I figured it out. The collision with obstacles is done on the CPU and dramatically slows the program down, so I don't use it very much. The code isn't very nice, but for my first GPU project, I am super happy with it. It opened up so many new project ideas and was so much fun to do, albeit quite frustrating.

The second video (on the left) is Conway's Game of Life running on the GPU: a cellular automaton, loosely modeled on cell populations, in which each cell lives or dies based on how many of its neighbors are alive. For one of my high school computer science classes, we had to build Conway's Game of Life in Python. My Python version was extremely slow, and I wanted to speed it up so I could run a more robust simulation. I did this project while mulling over my ray tracing project, so I tried to incorporate a few more powerful features into it. I learned quite a lot about thread groups, and I was able to apply what I learned to all of my other GPU projects.
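The Boids algorithm is usually written as three steering rules applied to each boid's neighbors: separation (avoid crowding), alignment (match their average velocity), and cohesion (steer toward their center). Below is a single CPU update step sketched in Swift. It is an O(n²) illustration, not the project's Metal compute shader; on the GPU, each boid's inner loop would run as one thread. The neighbor radius, the rule weights, the inverse-square separation falloff, and the names `Vec2`, `Boid`, and `updateFlock` are all hypothetical choices.

```swift
struct Vec2 {
    var x, y: Double
    static func + (a: Vec2, b: Vec2) -> Vec2 { Vec2(x: a.x + b.x, y: a.y + b.y) }
    static func - (a: Vec2, b: Vec2) -> Vec2 { Vec2(x: a.x - b.x, y: a.y - b.y) }
    static func * (a: Vec2, s: Double) -> Vec2 { Vec2(x: a.x * s, y: a.y * s) }
    var length: Double { (x * x + y * y).squareRoot() }
}

struct Boid {
    var position: Vec2
    var velocity: Vec2
}

/// One update of the three Boids rules. Every boid reads the *previous* flock state,
/// which mirrors how a GPU kernel would read one buffer and write another.
func updateFlock(_ boids: [Boid], dt: Double, radius: Double = 5,
                 separationWeight: Double = 1.5, alignmentWeight: Double = 1.0,
                 cohesionWeight: Double = 1.0) -> [Boid] {
    return boids.indices.map { i -> Boid in
        var separation = Vec2(x: 0, y: 0)
        var velocitySum = Vec2(x: 0, y: 0)
        var positionSum = Vec2(x: 0, y: 0)
        var neighbours = 0
        for j in boids.indices where j != i {
            let offset = boids[j].position - boids[i].position
            let distance = offset.length
            guard distance < radius, distance > 0 else { continue }
            separation = separation - offset * (1 / (distance * distance))  // push away, harder when close
            velocitySum = velocitySum + boids[j].velocity
            positionSum = positionSum + boids[j].position
            neighbours += 1
        }
        var b = boids[i]
        if neighbours > 0 {
            let n = Double(neighbours)
            let alignment = velocitySum * (1 / n) - b.velocity  // match neighbours' average velocity
            let cohesion = positionSum * (1 / n) - b.position   // steer toward the local centre
            let steering = separation * separationWeight + alignment * alignmentWeight + cohesion * cohesionWeight
            b.velocity = b.velocity + steering * dt
        }
        b.position = b.position + b.velocity * dt
        return b
    }
}
```

With these weights, two boids one unit apart push each other away, since separation (weight 1.5) outweighs cohesion (weight 1.0) at that distance; a boid with no neighbors simply keeps flying in a straight line.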

This was the first project I ever built. I wanted to create an app for basic utilities like reminders, a calendar (this was more of my mom's influence), multiple timers (we really needed them in the lab), etc. I based it on Let's Build That App's YouTube series. I never finished the project, but it was a great learning tool!